Abeer Banerjee

আবীর ব্যানার্জী

I am a PhD student focusing on computational imaging. I am currently affiliated with AcSIR, CSIR-CEERI, Helmholtz Munich, and TUM. I hold a bachelor's degree in Engineering from the University of Calcutta. I was born and raised in Durgapur, West Bengal, India. Outside research, I am fascinated by philosophy, science fiction, politics, and photography.

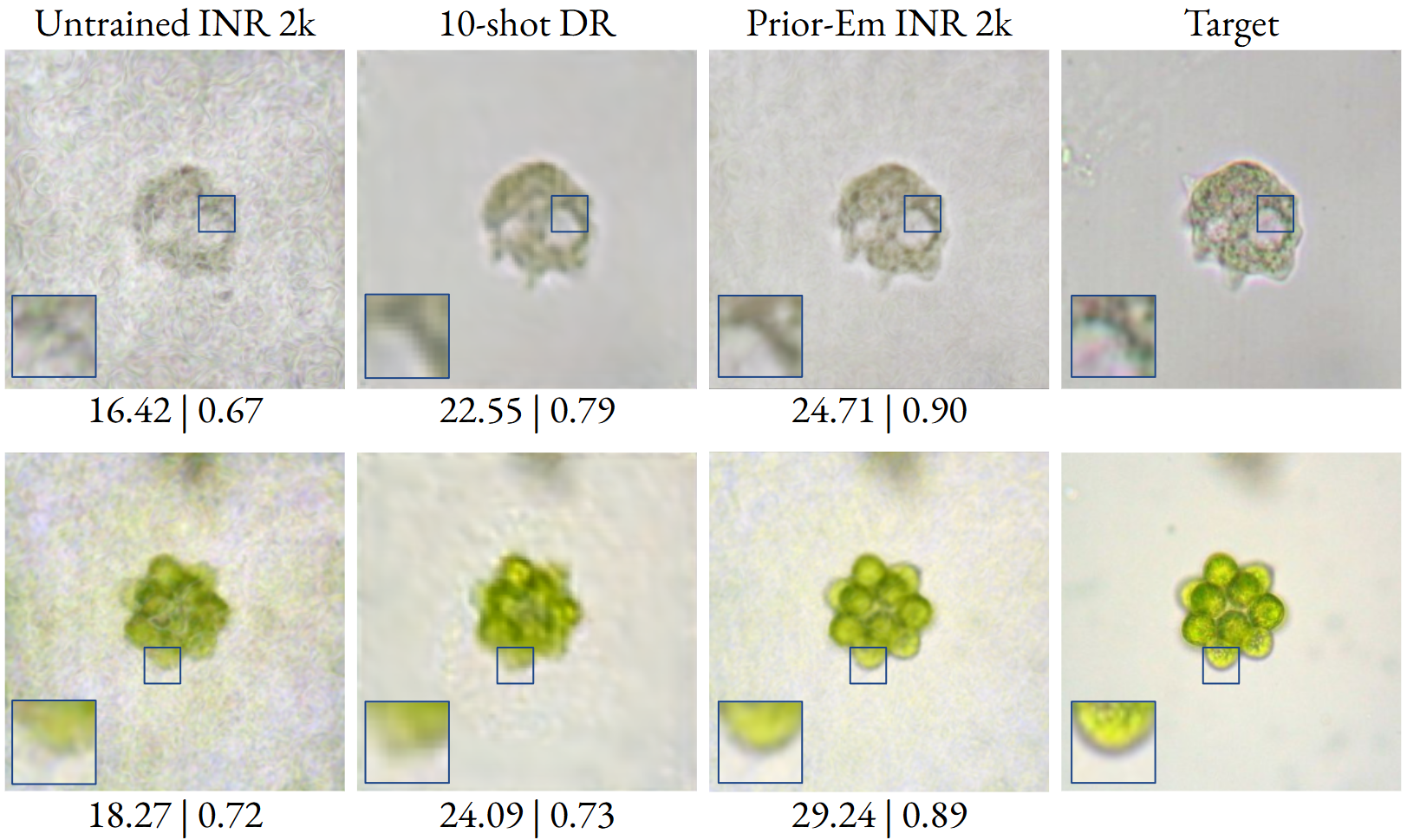

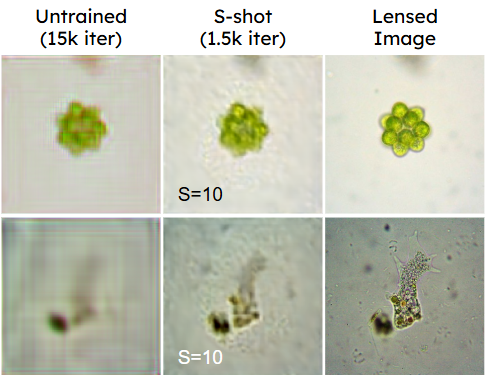

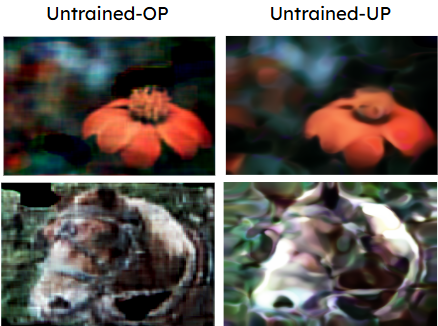

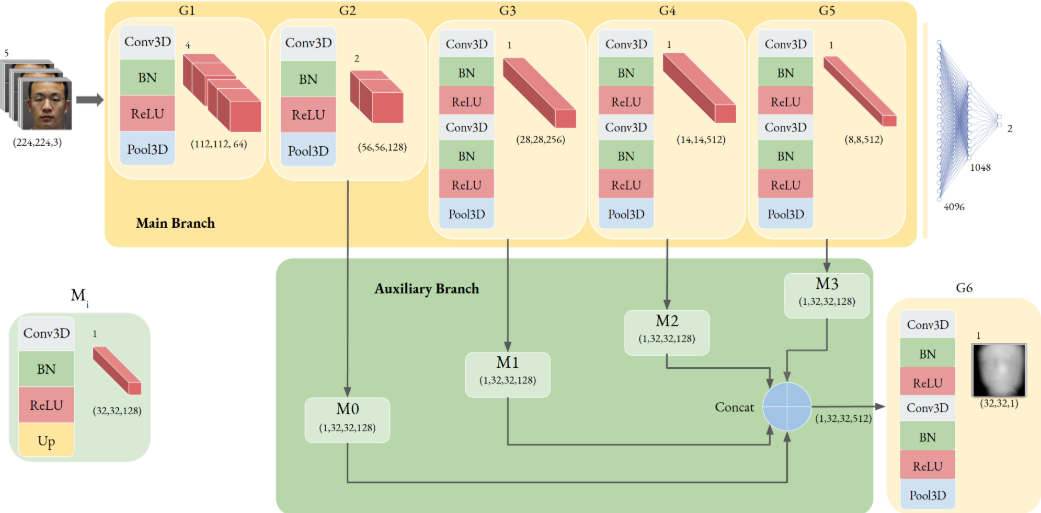

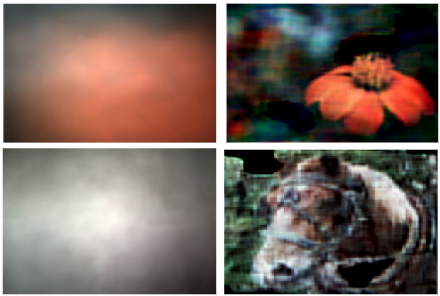

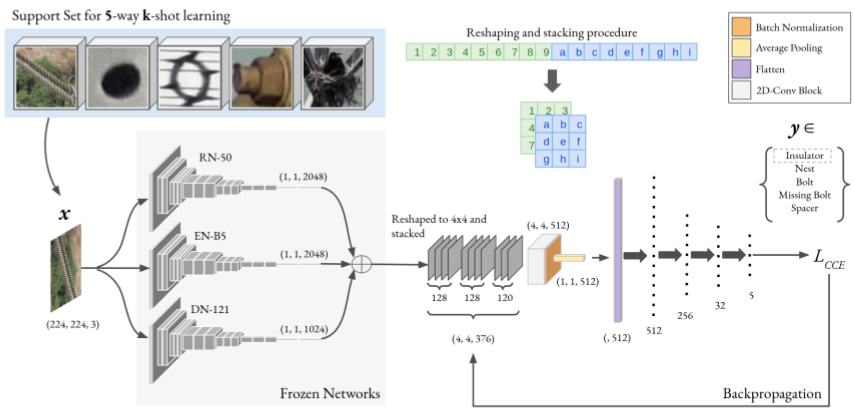

My research interests lie at the intersection of computational imaging, computer vision, and machine learning. I focus on computational image formation and reconstruction in low-data settings. Currently, I am working on the computational reconstruction of optoacoustic microscopy images. As a DAAD Bi-national PhD Fellow, I am jointly supervised by Dr. Sanjay Singh and Prof. Vasilis Ntziachristos.